[1.3.2] Function Vectors & Model Steering

Please report any problems or bugs in the #errata channel of the Slack group, and ask questions in the dedicated channels for this chapter.



Introduction

These exercises explore the following question: can we steer a model to produce different outputs or behave differently by intervening on its forward pass, using vectors found by methods other than gradient descent?

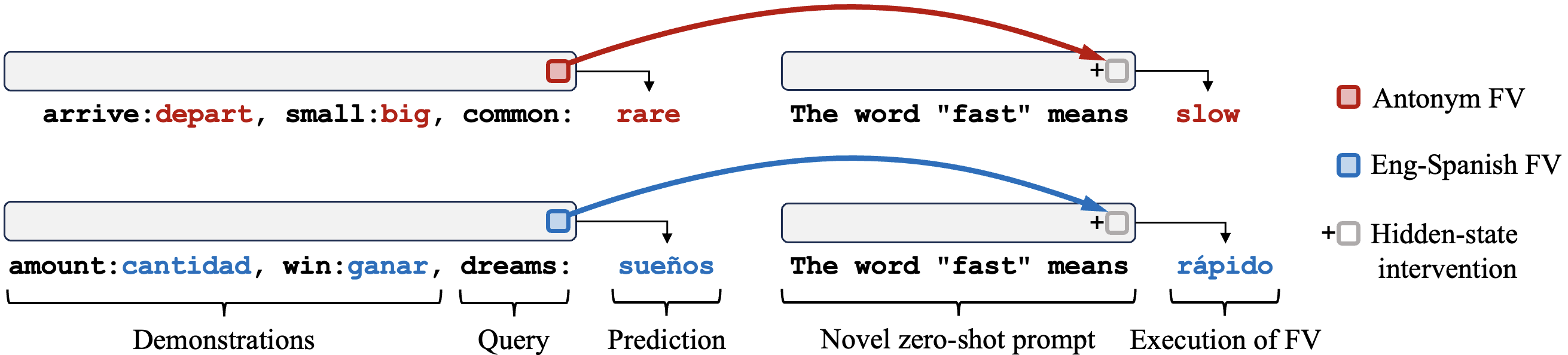

The majority of the exercises focus on function vectors: vectors which are extracted from forward passes on in-context learning (ICL) tasks, and added to the residual stream in order to trigger the execution of this task from a zero-shot prompt. The diagram below illustrates this.
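In plain PyTorch terms, "adding a vector to the residual stream" can be sketched with a forward hook. This is a minimal sketch, not nnsight's API: `ToyBlock` is a hypothetical stand-in for a transformer layer, `fv` is a random placeholder for a function vector, and adding it at the final token position of the prompt is an assumption of this sketch.

```python
import torch as t
from torch import nn

t.manual_seed(0)
d_model, seq_len, n_layers = 8, 5, 4


class ToyBlock(nn.Module):
    """Hypothetical stand-in for a transformer block: residual stream plus a position-wise update."""

    def __init__(self, d: int):
        super().__init__()
        self.mlp = nn.Linear(d, d)

    def forward(self, x: t.Tensor) -> t.Tensor:
        return x + self.mlp(x)


model = nn.Sequential(*[ToyBlock(d_model) for _ in range(n_layers)])


def add_at_final_position(vector: t.Tensor):
    """Build a forward hook that adds `vector` to a block's output at the last sequence position."""

    def hook(module, inputs, output):
        output = output.clone()
        output[:, -1] += vector
        return output  # a non-None return value replaces the block's output

    return hook


x = t.randn(1, seq_len, d_model)  # stand-in for the embedded zero-shot prompt
fv = t.randn(d_model)  # stand-in for a function vector

with t.no_grad():
    clean = model(x)
    handle = model[2].register_forward_hook(add_at_final_position(fv))
    steered = model(x)
    handle.remove()  # remove the hook, or it stays active for every later call
```

Because the toy blocks act on each position independently, only the final position changes here; in a causal transformer, earlier positions are also unaffected by an edit at the last position, since attention only looks backwards.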

The exercises also take you through the use of the nnsight library, which is designed to support this kind of work (and other interpretability research) on very large language models, i.e. models larger than GPT2-Small, which you might be used to by this point in the course.

The final set of exercises looks at Alex Turner et al.'s work on steering vectors, which is conceptually related but has different aims and methodologies.

Content & Learning Objectives

1️⃣ Introduction to nnsight

In this section, you'll learn the basics of how to use the nnsight library: running forward passes on your model and saving its internal states. You'll also learn some basics of HuggingFace models that carry over to nnsight models (e.g. tokenization, and how to work with model output).
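nnsight handles this for you, but the underlying operation, reading an intermediate activation during a forward pass, can be sketched in plain PyTorch with a forward hook. The toy model and token ids below are hypothetical; a real HuggingFace causal language model takes tokenizer output and returns an object whose `.logits` field has shape `(batch, seq, vocab)`, which the toy output mimics.

```python
import torch as t
from torch import nn

t.manual_seed(0)

# Hypothetical toy model standing in for a language model
model = nn.Sequential(
    nn.Embedding(50, 16),  # token ids -> vectors
    nn.Linear(16, 16),
    nn.ReLU(),
    nn.Linear(16, 50),  # vectors -> logits over a 50-token vocabulary
)

saved: dict[str, t.Tensor] = {}


def save_output(name: str):
    """Build a forward hook that stores a copy of the module's output under `name`."""

    def hook(module, inputs, output):
        saved[name] = output.detach().clone()  # returning None leaves the output unchanged

    return hook


handle = model[2].register_forward_hook(save_output("post_relu"))
tokens = t.tensor([[3, 14, 15, 9]])  # (batch=1, seq=4) stand-in for a tokenized prompt
with t.no_grad():
    logits = model(tokens)
handle.remove()

print(logits.shape)  # torch.Size([1, 4, 50])
print(saved["post_relu"].shape)  # torch.Size([1, 4, 16])
next_token = logits[0, -1].argmax().item()  # greedy prediction for the next token
```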

Learning Objectives

- Learn the basics of the nnsight library, and what it can be useful for

- Learn some basics of HuggingFace models (e.g. tokenization, model output)

- Use nnsight to extract & visualise GPT-J-6B's internal activations

2️⃣ Task-encoding hidden states

We'll begin with the following question, posed by the Function Vectors paper:

When a transformer processes an ICL (in-context-learning) prompt with exemplars demonstrating task $T$, do any hidden states encode the task itself?

We'll show that the answer is yes, by constructing a vector $h$ from a set of ICL prompts for the antonym task, and intervening with this vector to make the model produce antonyms on zero-shot prompts.
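The construction of $h$ can be sketched as averaging the residual stream at the final token position over several ICL prompts, at one chosen layer. Everything here is a hypothetical toy (random token ids standing in for antonym prompts, a position-wise `ToyBlock`, `layer = 1`); with a real model you would read these states from the forward pass instead.

```python
import torch as t
from torch import nn

t.manual_seed(0)
vocab, d_model, n_layers, layer = 20, 8, 3, 1


class ToyBlock(nn.Module):
    """Hypothetical stand-in for a transformer block: residual stream plus a position-wise update."""

    def __init__(self, d: int):
        super().__init__()
        self.mlp = nn.Linear(d, d)

    def forward(self, x: t.Tensor) -> t.Tensor:
        return x + self.mlp(x)


embed = nn.Embedding(vocab, d_model)
blocks = nn.ModuleList([ToyBlock(d_model) for _ in range(n_layers)])


def hidden_states(tokens: t.Tensor) -> list[t.Tensor]:
    """Return the residual stream (seq, d_model) after every block, for one unbatched prompt."""
    x = embed(tokens)
    states = []
    for block in blocks:
        x = block(x)
        states.append(x)
    return states


# Hypothetical tokenized ICL prompts of different lengths
icl_prompts = [t.randint(0, vocab, (n,)) for n in (6, 7, 9)]

with t.no_grad():
    # Residual stream at `layer`, final token position, one row per prompt
    last_token_states = t.stack([hidden_states(p)[layer][-1] for p in icl_prompts])
    h = last_token_states.mean(dim=0)  # average over prompts -> one task vector

print(h.shape)  # torch.Size([8])
```

The resulting `h` is what gets added back into the residual stream of a zero-shot prompt at the same layer.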

This will require you to learn how to perform causal interventions with nnsight, not just save activations.

(Note - this section structurally follows section 2.1 of the function vectors paper).

Learning Objectives

- Understand how nnsight can be used to perform causal interventions, and perform some yourself

- Reproduce the "h-vector results" from the function vectors paper: that the residual stream does contain a vector which encodes the task and can induce task behaviour on zero-shot prompts

3️⃣ Function Vectors

In this section, we'll replicate the crux of the paper's results, by identifying a set of attention heads whose outputs have a large effect on the model's ICL performance, and showing we can patch with these vectors to induce task-solving behaviour on randomly shuffled prompts.
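One hedged way to score a single head, assuming a corrupted run on shuffled prompts and a second run where that head's output is patched in, is the increase in the probability of the correct next token. The function name `prob_increase` and the tensor shapes are illustrative, not the exercises' API; repeating it with one head patched at a time would give a grid of scores over layers and heads.

```python
import torch as t


def prob_increase(
    corrupted_logits: t.Tensor, patched_logits: t.Tensor, correct_ids: t.Tensor
) -> t.Tensor:
    """Mean increase in the probability of the correct next token caused by a patch.

    corrupted_logits, patched_logits: (batch, vocab) logits at the final position
    correct_ids: (batch,) token id of the correct answer for each prompt
    """
    rows = t.arange(correct_ids.shape[0])
    p_corrupted = corrupted_logits.softmax(dim=-1)[rows, correct_ids]
    p_patched = patched_logits.softmax(dim=-1)[rows, correct_ids]
    return (p_patched - p_corrupted).mean()


# Toy example: 2 prompts, vocabulary of 4 tokens
corrupted = t.zeros(2, 4)  # uniform prediction, so p(correct) = 0.25 for both prompts
patched = t.tensor([[0.0, 0.0, 10.0, 0.0], [0.0, 0.0, 0.0, 0.0]])
correct = t.tensor([2, 1])
effect = prob_increase(corrupted, patched, correct)
```

Only the first prompt's patch helps here, so the averaged effect is roughly half of (1 - 0.25).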

We'll also learn how to use nnsight for multi-token generation, and how to steer the model's behaviour. There are exercises in which you can try this out on different tasks, e.g. the Country-Capitals task, where you'll steer the model to complete prompts like "When you think of Netherlands, you usually think of" by talking about Amsterdam.
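Multi-token generation re-runs the model once per new token, so a steering intervention has to stay active across all of those forward passes. The sketch below uses a plain greedy loop with a PyTorch hook rather than nnsight's generation API; the toy model, the random steering vector and the choice to add it at every position are all assumptions.

```python
import torch as t
from torch import nn

t.manual_seed(0)
vocab, d_model = 30, 16


class ToyBlock(nn.Module):
    """Hypothetical stand-in for a transformer block (residual + position-wise update)."""

    def __init__(self, d: int):
        super().__init__()
        self.mlp = nn.Linear(d, d)

    def forward(self, x: t.Tensor) -> t.Tensor:
        return x + self.mlp(x)


model = nn.Sequential(
    nn.Embedding(vocab, d_model), ToyBlock(d_model), ToyBlock(d_model), nn.Linear(d_model, vocab)
)


def greedy_generate(model: nn.Module, tokens: t.Tensor, n_new: int) -> t.Tensor:
    """Append `n_new` greedily chosen tokens, re-running the full sequence each step."""
    with t.no_grad():
        for _ in range(n_new):
            logits = model(tokens)  # (batch, seq, vocab)
            next_tok = logits[:, -1].argmax(dim=-1, keepdim=True)  # (batch, 1)
            tokens = t.cat([tokens, next_tok], dim=1)
    return tokens


steer = 5 * t.randn(d_model)  # hypothetical steering vector


def add_steer(module, inputs, output):
    return output + steer  # applied at every position, on every generation step


prompt = t.tensor([[1, 2, 3]])
baseline = greedy_generate(model, prompt, n_new=5)
handle = model[1].register_forward_hook(add_steer)
steered = greedy_generate(model, prompt, n_new=5)
handle.remove()
```

Re-running the whole sequence each step is the simplest correct loop; practical generation code usually caches keys and values instead.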

(Note - this section structurally follows sections 2.2, 2.3 and some of section 3 from the function vectors paper).

Learning Objectives

- Define a metric to measure the causal effect of each attention head on the correct performance of the in-context learning task

- Understand how to rearrange activations in a model during an nnsight forward pass, to extract activations corresponding to a particular attention head

- Learn how to use nnsight for multi-token generation

4️⃣ Steering Vectors in GPT2-XL

Here, we discuss a different but related line of research: Alex Turner's work on steering vectors. This also falls under the umbrella of "interventions in the residual stream, using vectors found with forward-pass-based (non-SGD) methods, in order to alter behaviour", but it has a different setup, objectives, and approach.
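The core arithmetic of a steering vector in this style can be sketched as: take the residual stream at one layer for two contrasting prompts, subtract, scale by a coefficient, and add the result to the residual stream of the user's prompt. This sketch assumes the addition happens at the first token positions and that both steering prompts have the same token length; the function name `act_add` and the toy tensors are illustrative.

```python
import torch as t


def act_add(resid: t.Tensor, act_pos: t.Tensor, act_neg: t.Tensor, coeff: float) -> t.Tensor:
    """Add coeff * (act_pos - act_neg) to the first positions of the residual stream.

    resid: (batch, seq, d_model) residual stream of the user prompt at some layer
    act_pos, act_neg: (steer_seq, d_model) residual stream at the same layer for two
        contrasting steering prompts, assumed here to have the same number of tokens
    """
    steer = coeff * (act_pos - act_neg)  # (steer_seq, d_model)
    n = steer.shape[0]
    if n > resid.shape[1]:
        raise ValueError("the user prompt must be at least as long as the steering prompts")
    out = resid.clone()
    out[:, :n] += steer  # broadcasts over the batch dimension
    return out


resid = t.zeros(1, 6, 4)  # toy residual stream
act_pos, act_neg = t.ones(2, 4), t.zeros(2, 4)  # toy activations for the two steering prompts
steered = act_add(resid, act_pos, act_neg, coeff=5.0)
```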

Learning Objectives

- Understand the goals & main results from Alex Turner et al.'s work on steering vectors

- Reproduce the changes in behaviour described in their initial post

☆ Bonus

Lastly, we discuss some possible extensions of function vectors & steering vectors work, which is currently an exciting area of development (e.g. with a paper on steering Llama 2-13b coming out as recently as December 2023).

Setup code

```python
import logging
import os
import sys
import time
from collections import defaultdict
from pathlib import Path

import circuitsvis as cv
import einops
import numpy as np
import torch as t
from IPython.display import display
from jaxtyping import Float
from nnsight import CONFIG, LanguageModel
from openai import OpenAI
from rich import print as rprint
from rich.table import Table
from torch import Tensor

# Hide some info logging messages from nnsight
logging.disable(sys.maxsize)

# These exercises only run inference, so disable gradient tracking globally
t.set_grad_enabled(False)

# Prefer Apple Silicon (MPS), then CUDA, then fall back to CPU
device = t.device(
    "mps" if t.backends.mps.is_available() else "cuda" if t.cuda.is_available() else "cpu"
)

# Make sure exercises are in the path
chapter = "chapter1_transformer_interp"
section = "part42_function_vectors_and_model_steering"
# Search the current directory and its parents for the folder containing this chapter
root_dir = next(p for p in [Path.cwd(), *Path.cwd().parents] if (p / chapter).exists())
exercises_dir = root_dir / chapter / "exercises"
section_dir = exercises_dir / section
if str(exercises_dir) not in sys.path:
    sys.path.append(str(exercises_dir))

import part42_function_vectors_and_model_steering.solutions as solutions
import part42_function_vectors_and_model_steering.tests as tests
from plotly_utils import imshow

MAIN = __name__ == "__main__"
```